The Kinovis studio was recently extended to perform real-time 3D reconstruction. The previous version of the platform, Grimage, also did this, but at a much smaller scale (up to 12 cameras). Kinovis replaces the EPVH-based algorithm with a more scalable one based on QuickCSG.

Each of the 60 cameras streams its video to one of the 17 acquisition machines. Each machine extracts the silhouettes of its cameras locally and computes their contours. A 3-stage algorithm with 2 n-to-n synchronization points then produces a portion of the result on each acquisition machine, and these portions are centralized on the host that renders the result. Reconstruction takes between 40 and 100 ms for 30k to 120k output triangles, and the total latency, including image capture, 2D operations and rendering, is 150 to 200 ms at 15 to 20 fps.
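The post does not say what each of the three stages computes, so the following is only a structural sketch of how 3 stages with 2 n-to-n synchronization points can be organized. One thread stands in for each acquisition machine, and a `threading.Barrier` between consecutive stages plays the role of a synchronization point: once every machine has published its output for a stage, every machine can read all of those outputs (a bulk-synchronous, all-to-all exchange). The names `run_stages`, `stage1` to `stage3`, and the round-robin camera assignment are hypothetical placeholders, not the Kinovis code; a real deployment exchanges data over the network rather than through shared memory.

```python
import threading
from typing import Any, Callable, List

# A stage maps (this machine's id, every machine's output from the previous stage)
# to this machine's output for the current stage.
Stage = Callable[[int, List[Any]], Any]


def run_stages(num_machines: int, stages: List[Stage]) -> List[Any]:
    """Run `stages` on one thread per machine, with an n-to-n barrier between stages."""
    barrier = threading.Barrier(num_machines)
    # levels[k][m] is machine m's output of stage k; level 0 is an empty placeholder.
    levels = [[None] * num_machines for _ in range(len(stages) + 1)]

    def worker(m: int) -> None:
        try:
            for k, stage in enumerate(stages):
                levels[k + 1][m] = stage(m, levels[k])
                if k < len(stages) - 1:
                    # Synchronization point: no machine starts stage k+1 until all
                    # machines have published their stage-k output.
                    barrier.wait()
        except BaseException:
            barrier.abort()  # release the other machines instead of deadlocking them
            raise

    threads = [threading.Thread(target=worker, args=(m,)) for m in range(num_machines)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # One portion of the result per machine; the render host would gather these.
    return levels[-1]


# --- Hypothetical usage: 60 cameras on 17 machines, placeholder stage bodies ---
NUM_CAMERAS, NUM_MACHINES = 60, 17
cameras_of = {m: list(range(m, NUM_CAMERAS, NUM_MACHINES)) for m in range(NUM_MACHINES)}


def stage1(m: int, _prev: List[Any]) -> List[str]:
    # Local 2D work: one placeholder "contour" per camera attached to machine m.
    return [f"contour{c}" for c in cameras_of[m]]


def stage2(m: int, prev: List[Any]) -> int:
    # After sync point 1, every machine can read all contours.
    return sum(len(contours) for contours in prev)


def stage3(m: int, prev: List[Any]) -> str:
    # After sync point 2, build this machine's share of the output (placeholder).
    return f"portion{m}/{prev[m]}"


portions = run_stages(NUM_MACHINES, [stage1, stage2, stage3])
```

With round-robin assignment, 9 machines get four cameras and 8 machines get three, which covers all 60 cameras. Only two barriers are crossed, one between each pair of consecutive stages.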

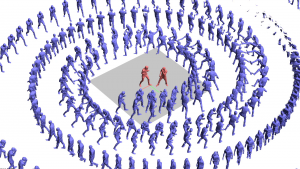

The reconstruction area in Kinovis is quite large, and QuickCSG can reconstruct regions outside the intersection of the cameras' fields of view. Therefore, interactions between two or more people can be rendered.
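One way to picture reconstruction beyond the common field of view is a point-membership test for the visual hull. The sketch below is a simple sampling-based test swapped in for illustration. It is not QuickCSG, which operates on polyhedra rather than sampled points. Under the strict rule a point must be visible in every camera, so anything outside the intersection of all fields of view is lost. Under the relaxed rule only the cameras that actually see a point vote on it: the point is kept if it lies inside all of their silhouettes and at least `min_views` cameras see it. The orthographic `OrthoCamera`, the `disks` silhouettes, and the `min_views` threshold are hypothetical toys.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple

Point2 = Tuple[float, float]
Point3 = Tuple[float, float, float]


@dataclass
class OrthoCamera:
    """Toy orthographic camera: looks along one axis and sees a rectangular window."""
    drop_axis: int                          # viewing direction: 0 = x, 1 = y, 2 = z
    fov_min: Point2                         # lower corner of the visible window (image coords)
    fov_max: Point2                         # upper corner of the visible window
    silhouette: Callable[[Point2], bool]    # True where the image is foreground

    def project(self, p: Point3) -> Point2:
        u, v = (coord for axis, coord in enumerate(p) if axis != self.drop_axis)
        return (u, v)

    def sees(self, p: Point3) -> bool:
        u, v = self.project(p)
        return (self.fov_min[0] <= u <= self.fov_max[0]
                and self.fov_min[1] <= v <= self.fov_max[1])


def in_hull(p: Point3, cams: Sequence[OrthoCamera],
            min_views: int = 2, strict: bool = False) -> bool:
    """Visual-hull membership of point p.

    strict=True : p must be visible in every camera (common field of view only).
    strict=False: only cameras that see p vote; p must lie in all their silhouettes
                  and be seen by at least `min_views` cameras.
    """
    seeing = [c for c in cams if c.sees(p)]
    if strict and len(seeing) < len(cams):
        return False
    if len(seeing) < min_views:
        return False
    return all(c.silhouette(c.project(p)) for c in seeing)


def disks(centers, r: float = 0.5) -> Callable[[Point2], bool]:
    """Silhouette made of disks of radius r around the given image points."""
    return lambda q: any((q[0] - cx) ** 2 + (q[1] - cy) ** 2 <= r * r for cx, cy in centers)


# Two people at x = 0 and x = 2; camera C only sees the region around x = 0.
cams = [
    OrthoCamera(2, (-1, -1), (3, 1), disks([(0, 0), (2, 0)])),  # A: looks along z, sees (x, y)
    OrthoCamera(0, (-1, -1), (1, 1), disks([(0, 0)])),          # B: looks along x, sees (y, z)
    OrthoCamera(1, (-1, -1), (1, 1), disks([(0, 0)])),          # C: looks along y, sees (x, z)
]
```

With these toy cameras, the second person at x = 2 lies outside camera C's window. The strict rule therefore discards that person, while the relaxed rule keeps them because both cameras that do see them agree.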

There is no reason to throw a mesh away once it has been displayed: meshes can also be kept or animated in funny ways. In the following videos, the red figure is the current (newest) mesh. The other figures are older meshes that are either displayed at their original location (green), propagated along a spiral (violet), or shown around the central figure with a latency of 1 s, 2 s, …, 8 s (cyan).
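Keeping old meshes only requires a time-stamped history. The following is a minimal sketch under stated assumptions: `MeshHistory`, `spiral_offset` and `ring_offset` are hypothetical helpers, meshes are treated as opaque objects, and the spiral and ring parameters are arbitrary. `at_delay` returns the mesh to show with a given latency (the cyan figures). `spiral_offset` displaces a mesh along a spiral according to its age (the violet figures). `ring_offset` places the k-th delayed copy on a circle around the central figure.

```python
import math
from collections import deque
from typing import Any, Deque, Optional, Tuple


class MeshHistory:
    """Time-stamped history of reconstructed meshes (hypothetical helper)."""

    def __init__(self, horizon_s: float = 8.0) -> None:
        self.horizon_s = horizon_s
        self.frames: Deque[Tuple[float, Any]] = deque()  # (capture time in s, mesh), oldest first

    def push(self, t: float, mesh: Any) -> None:
        self.frames.append((t, mesh))
        # Drop meshes older than the horizon, but keep the newest mesh that is at least
        # `horizon_s` old, so that a request with the full horizon as delay still succeeds.
        while len(self.frames) > 1 and self.frames[1][0] <= t - self.horizon_s:
            self.frames.popleft()

    def at_delay(self, now: float, delay_s: float) -> Optional[Any]:
        """Newest mesh captured at least `delay_s` seconds before `now`, or None."""
        target = now - delay_s
        for t, mesh in reversed(self.frames):
            if t <= target:
                return mesh
        return None


def spiral_offset(age_s: float, turns_per_s: float = 0.25,
                  growth_m_per_s: float = 0.3) -> Tuple[float, float, float]:
    """Floor-plane offset for a mesh of the given age: it winds outward along a spiral."""
    angle = 2.0 * math.pi * turns_per_s * age_s
    radius = growth_m_per_s * age_s
    return (radius * math.cos(angle), radius * math.sin(angle), 0.0)


def ring_offset(k: int, count: int = 8, radius_m: float = 1.5) -> Tuple[float, float, float]:
    """Offset of the k-th delayed copy (k = 1..count) on a circle around the central figure."""
    angle = 2.0 * math.pi * (k - 1) / count
    return (radius_m * math.cos(angle), radius_m * math.sin(angle), 0.0)


# Simulate 10 s at 20 fps; each "mesh" is just its frame index.
history = MeshHistory()
for i in range(200):
    history.push(i / 20, i)
cyan = [(history.at_delay(10.0, k), ring_offset(k)) for k in range(1, 9)]
```

At 20 fps an 8 s history holds about 160 meshes, so the linear scan in `at_delay` is cheap. For much longer histories, a bisection over the timestamps would be the natural replacement.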

Currently the reconstruction is not perfect, mainly because some contour polygons are invalid (they self-intersect). More importantly, the textures, which are available from the RGB cameras, are not rendered yet. This is tricky because the input cameras produce about 1.6 GB/s of texture data, which cannot be centralized over the 10 Gb/s interface of the rendering machine (10 Gb/s is only about 1.25 GB/s). Therefore, clever adaptive texture compression techniques must be used to reduce this data rate to a manageable level without degrading the quality of the result. Both issues will be the subject of further research in the coming months.
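The bandwidth argument can be checked with a little arithmetic. This sketch reads the rendering machine's interface as 10 gigabits per second (about 1.25 GB/s) and uses decimal units. The function names and the `headroom` parameter (the fraction of the link reserved for texture data) are illustrative assumptions. Even with the whole link devoted to textures, the stream must shrink by at least 1.6 × 8 / 10 = 1.28×, and each of the 60 cameras gets a budget of about 20.8 MB/s.

```python
def min_compression_ratio(texture_gb_per_s: float, link_gbit_per_s: float,
                          headroom: float = 1.0) -> float:
    """Smallest compression ratio that lets the texture stream fit on the link.

    `headroom` is the fraction of the link reserved for textures (1.0 = all of it).
    """
    texture_gbit_per_s = texture_gb_per_s * 8.0  # gigabytes -> gigabits
    return texture_gbit_per_s / (link_gbit_per_s * headroom)


def per_camera_budget_mb_per_s(link_gbit_per_s: float, num_cameras: int,
                               headroom: float = 1.0) -> float:
    """Compressed texture budget per camera, in megabytes per second (decimal units)."""
    link_mb_per_s = link_gbit_per_s * 1000.0 / 8.0  # gigabits/s -> megabytes/s
    return link_mb_per_s * headroom / num_cameras


ratio = min_compression_ratio(1.6, 10.0)                          # about 1.28
budget = per_camera_budget_mb_per_s(10.0, 60)                     # about 20.8 MB/s per camera
ratio_half_link = min_compression_ratio(1.6, 10.0, headroom=0.5)  # about 2.56
```

In practice part of the link also carries the meshes, so the required ratio is higher. If only half of the link is available for textures, the minimum ratio doubles to 2.56.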

Videos by Thomas, Mickael and Matthijs.